With the increasing number of small devices we use in everyday life, it is getting more difficult to keep track of the interfaces these devices employ. People usually stick to one interface and master it. As a result, switching between devices that provide the same functions through different interfaces carries a switching cost, usually time. Hence, any new device has to have an intuitive interface if it is to make it in the market.

With the advent of smartphones, less and less emphasis has been placed on gadgets that provide specialized functions. Utilising technology that has been growing smaller over the years, these phones are able to contain many functions within themselves, such as music playing and picture taking. The current boom in application creation, brought about by Apple giving APIs to developers and by Google expanding on this with its open-source approach, is increasing the number of functions a smartphone has. With just a laptop, an application can be created to replace the television remote control, or to use the camera flash as a flashlight.

With so many functions embedded in one tiny device, it can certainly be difficult to navigate. One example is a phone company creating a menu of its own to allow easier navigation, when all it does is add to the confusion by giving users more items to look through. The 2 pictures below show the same smartphone tethering option in two different menus.

Having established that much confusion still exists in the navigation of interfaces in small devices, let us look at some of the ways that are being employed to help solve this.

First up is the famous (or infamous) Siri, known for executing tasks through voice commands and for comprehending Western accents more reliably than others. This application helps tie all the other applications together through the use of a voice interpreter. In this manner, the user can navigate through the phone without actually having to press any buttons. This, however, is not very comprehensive, as it does not cover the manner of speech of non-Westerners, which has led to much frustration being posted on YouTube. Additionally, it seems unable to interpret some sentences correctly, or gives the same answers to different questions. This may interrupt the user's immersive experience by bringing him/her back to the reality that (s)he is talking to a computer.

Next would be the use of cascading menus to help categorize different items. This approach has long been used in many operating systems. The main difficulty is choosing keywords correctly and consistently, while making sure the same menu item does not appear more than once. Since the choice of words varies from person to person, this approach is not very intuitive, and users master the navigation only through repeated use.
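To make the "same menu item more than once" problem concrete, here is a minimal sketch that walks a menu tree and reports any item reachable by more than one path. The menu contents and the function name `find_duplicate_items` are made up for illustration; they are not taken from any real phone.

```python
from collections import defaultdict

# Hypothetical menu tree: nested dicts are submenus, None marks a leaf item.
MENU = {
    "Settings": {
        "Wireless & networks": {"Wi-Fi": None, "Tethering": None},
        "Carrier services": {"Tethering": None, "Roaming": None},
    },
    "Apps": {"Camera": None, "Music": None},
}


def find_duplicate_items(menu):
    """Return {label: [paths]} for every leaf label reachable by more than one path."""
    seen = defaultdict(list)

    def walk(node, trail):
        for label, child in node.items():
            if child is None:
                # Leaf item: remember the full path that leads to it.
                seen[label].append(" > ".join(trail + (label,)))
            else:
                walk(child, trail + (label,))

    walk(menu, ())
    return {label: paths for label, paths in seen.items() if len(paths) > 1}


duplicates = find_duplicate_items(MENU)
for label, paths in duplicates.items():
    print(label, "appears in:", paths)
```

A check like this only catches identical labels; it would not catch the same function hiding under two different names, which is the harder wording problem.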

Another method is the use of desktop-like pages to organise various icons which allow users to tell at a glance what applications there are without having to read the fine words. This method may require some customization on the part of the user, so it's not really easy to use at first.

While there is currently no fully functional and intuitive method of traversing content on small portable devices, it is possible that voice control is the future, as it ties all the applications together and executes what the user says without requiring much effort on the user's part. This method is so intuitive that it appears in the film I, Robot: when Susan Calvin attempts to turn Detective Spooner's CD player on and off, she initially does it by voice. I believe the day when we can hold a conversation with our household devices is not too far off.

Human Computer Interaction

Friday, 28 October 2011

Wednesday, 26 October 2011

Computer interactivity using just the brain

The brain sensor equipment was an eye opener, since people seldom think of using brainwaves to do things. In fact, this is usually only seen in futuristic movies and the like. However, I have to say that I was not very surprised about it as I have heard about toys utilising such technology before. The presentation provided more insight into how these devices worked. Using four types of brainwaves and their levels, the computer is able to detect the user concentrating on something, and even blinking.
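As a rough illustration of how band levels could be turned into a "concentrating or not" signal, here is a minimal sketch. It rests on several assumptions: the post does not name the four brainwave types, so delta, theta, alpha and beta are assumed here; the ratio of beta to alpha plus theta is one heuristic sometimes used as an engagement index, not necessarily what the presented device does; and the threshold and sample values are invented.

```python
def attention_score(bands):
    """Ratio of beta power to alpha plus theta power; higher suggests more focus."""
    denominator = bands["alpha"] + bands["theta"]
    if denominator <= 0:
        raise ValueError("alpha + theta power must be positive")
    return bands["beta"] / denominator


def is_concentrating(bands, threshold=1.0):
    # The threshold is a made-up placeholder; a real device would calibrate it per user.
    return attention_score(bands) > threshold


# Invented band levels in arbitrary units, for illustration only.
relaxed = {"delta": 4.0, "theta": 6.0, "alpha": 10.0, "beta": 5.0}
focused = {"delta": 3.0, "theta": 4.0, "alpha": 4.0, "beta": 12.0}

print("relaxed:", is_concentrating(relaxed))
print("focused:", is_concentrating(focused))
```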

Some benefits of this device are:

The ability to detect brain activity without having to use a large non-portable scanning device. This means that for applications that do not need a high amount of resolution, this would be enough. Having this portability allows for people to set up the equipment easily, thus increasing the amount of time people spend using it. This also includes the ability to perform user studies and connect human emotions to actions. With this device, studies could be conducted to find out what the user is feeling at different points in time during an activity without requiring elaborate set-ups.

The ability to do things without having to move your hands, or the ability to multitask even further by adding at least one more set of "hands" to the ones you already have. This would be useful for the disabled, since it would be able to tell what they are thinking without a need for them to fully express themselves in actions or words. Additionally, a skilled person would be able to do the work of 2 people by using their hands to move one set of controls and their mind to move another.

However, there are still some disadvantages to this device.

The device might not be able to detect the user's brainwaves due to some interference as minor as hair, or some other anomaly. This would mean that it would be more difficult for some users to utilise it, which could be a turn off.

As hard as some people try, they might not be able to produce the desired waveforms to control things, which would be very frustrating. A solution to this would be to customize the software so that it matches the waveforms each user is actually able to produce. However, this would require a database to store the information, and it is also time-consuming.

Current applications involve the user having to concentrate to turn a fan motor in order to raise a foam ball (MindFlex, Force Trainer). While these are just toys and may seem fun, they are actually unintuitive in that the user would try to keep thinking "rise" in an effort to concentrate and make the ball rise. This means that concentrating can only trigger one action, and relaxing another. This is due to the device's inability to sense at a higher resolution what the user is actually thinking. A past experiment on a monkey yielded results that allowed a robotic arm to move exactly as the monkey's arm did. However, this experiment was invasive, something that not many people would consent to.

Even though the device opens up new horizons into the world of human-computer interactivity, I feel that more research has to be done before it can be used to its fullest potential. Due to the difficulties involved in applying it to everyday uses, it is currently more useful as a data gathering device for researchers, or in criminology to find out if a suspect is lying.

Thursday, 20 October 2011

Motion Sensing Outside of Entertainment or Games

First of all, many thanks to EON Reality for their informative and educational presentation. It opened our eyes to some of the uses of motion sensing and enhanced visual technologies outside of entertainment.

We saw that motion sensing technology and enhanced visuals such as 3D monitors and holographic displays are very useful in serious industrial applications, such as simulation and training. Why would this be so?

Benefits:

1. Improving knowledge retention.

In philosophy, or psychology, there is a term called "qualia". Qualia simply means the raw experience of something. It has also been suggested that vivid qualia might be associated with enhanced memory retention. Hence, when training staff, a more vivid experience of the work itself should improve their learning efficiency far more than training videos or a thick manual.

2. Reducing cost.

Next would be cost. It is definitely possible to give staff a realistic training experience without simulation technology by, say, allowing them to participate in the event itself. However, there is the issue of cost. We can't let doctors operate on real patients for practice, or train firemen to fight fires by setting buildings on fire. Using a realistic simulation, we can greatly reduce the cost of training while still achieving a near-realistic experience.

Possible Problems:

Nevertheless, current motion sensing and augmented reality technologies are not yet mature, and many improvements are still required:

1. The issue of fatigue with motion sensing.

Reading a manual or watching a training video in an air-conditioned room is not going to cause much fatigue (falling asleep out of boredom doesn't count). However, when interacting with a system using motion sensing, you have to move your body, at times with exaggerated movements so that the system can register them. Doing so causes quite an amount of physical exertion and cannot be kept up for a long time. Hence, motion sensing can probably only be used as a complementary mode of interaction, not as a total replacement for other modes.

2. Issue of fatigue as well for 3D immersion technologies.

There is also a problem with 3D immersion technologies causing fatigue to the audience. This is mainly due to how stereoscopic 3D is implemented. Stereoscopic 3D uses 2 images taken from different viewpoints and shows each of your eyes a different perspective to simulate depth perception. However, the problem with this is that your brain now has to absorb twice the amount of information, which tends to cause eye and mental fatigue if used for a long period of time.
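The "different perspective for each eye" idea can be put into numbers with a small sketch. By similar triangles, a point at distance Z from the viewer, shown on a screen at distance D to eyes separated by e, is drawn with a horizontal offset of e * (Z - D) / Z between the left-eye and right-eye images. The function name and the sample values (6.5 cm eye separation, 60 cm viewing distance) are assumptions for illustration, not figures from the presentation.

```python
def screen_parallax(eye_separation, screen_distance, point_distance):
    """Horizontal offset between the left-eye and right-eye images of one point.

    All arguments share one unit (e.g. centimetres). Positive values place the
    point behind the screen, negative values in front of it, and zero puts it
    on the screen plane.
    """
    if point_distance <= 0:
        raise ValueError("point_distance must be positive")
    return eye_separation * (point_distance - screen_distance) / point_distance


EYE_SEPARATION_CM = 6.5    # assumed typical adult value
SCREEN_DISTANCE_CM = 60.0  # assumed viewing distance

for depth in (30.0, 60.0, 120.0):
    offset = screen_parallax(EYE_SEPARATION_CM, SCREEN_DISTANCE_CM, depth)
    print(f"point at {depth} cm -> parallax {offset} cm")
```

The further a point sits from the screen plane, the larger the offset the eyes have to fuse, which is one way to see why long sessions can be tiring.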

Conclusion:

Nevertheless, this is definitely an area with high potential. With further improvements, such immersive methods of interaction will definitely improve how we work, study and play in the future. To end off, let us show a video on Cisco's telepresence technology to give us a glimpse into how immersive interaction in the future could be:

Thursday, 6 October 2011

Function & Fashion

In HCI, there is a great emphasis on balancing fashion with function in the development of interactive designs. It is important to be descriptive and precise, but not so difficult or detailed that novice users cannot adopt and use the system efficiently. Here are 2 areas where improvements can be made and a balance achieved, namely error messages and nonanthropomorphic design:

Error messages

Why do errors occur? Lack of knowledge, incorrect understanding, and inadvertent slips cause them. It is therefore important for the developer to make error messages as user-friendly as possible; this is especially important for novice users, as they commonly lack knowledge and confidence and are sometimes easily frustrated or discouraged. This way everyone, novice or expert, will know exactly what to do to fix the error.

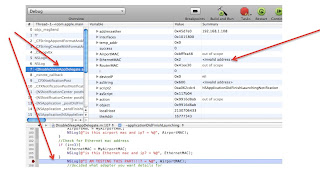

Another way to assist the user is to measure where errors occur the most, and then use this data to make the error messages for those cases more suitable and offer simple solutions. For example, in Xcode, when a program made an invalid memory access, the debugger used to simply report "Program received signal: “EXC_BAD_ACCESS”". However, after a version upgrade, Apple Inc. added the ability to see exactly where the issue was, as shown in this picture:

This way the error is clear and pinpointed to exactly where the issue is.
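Measuring where errors occur the most can start out very simply. The sketch below counts error codes in a log and lists the most frequent ones, so that their messages can be improved first. The log contents and the function name `most_frequent_errors` are hypothetical.

```python
from collections import Counter

# Hypothetical error log: one entry per error a user ran into.
error_log = [
    "EXC_BAD_ACCESS", "FILE_NOT_FOUND", "EXC_BAD_ACCESS",
    "INVALID_INPUT", "EXC_BAD_ACCESS", "FILE_NOT_FOUND",
]


def most_frequent_errors(log, top_n=2):
    """Return the top_n (error_code, count) pairs, most frequent first."""
    return Counter(log).most_common(top_n)


print(most_frequent_errors(error_log))
```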

Further reading and more information about error messages can be found here

Nonanthropomorphic Design

Nonanthropomorphic design deals with conversational messages between humans and computers. Anthropomorphism means attributing human qualities to a non-human entity. People feel less responsible for their actions and performance if they interact with an anthropomorphic interface.

Here are some guidelines:

Be cautious in presenting computers as people, either with synthesized or cartoon characters

Use appropriate humans for audio or video introductions or guides

Use cartoon characters in games or children’s software, but usually not elsewhere

Provide user-centered overviews for orientation and closure

Do not use “I” when the computer responds to human actions

Use “you” to guide users, or just state facts
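The last two guidelines lend themselves to an automated check. Here is a minimal sketch that flags system messages written in the first person; the function name `uses_first_person` and the sample messages are made up, and the pattern only covers a few common pronouns.

```python
import re

# Matches "I" (capital only, to avoid things like "i.e.") plus "me" and "my"
# as whole words. "I'm" and "I've" are caught because the apostrophe ends the word.
FIRST_PERSON = re.compile(r"\b(?:I|[Mm]e|[Mm]y)\b")


def uses_first_person(message):
    """Return True if a system message speaks as though the computer were a person."""
    return FIRST_PERSON.search(message) is not None


anthropomorphic = "I could not find your file."
factual = "The file was not found. Check the file name and try again."

print(uses_first_person(anthropomorphic))
print(uses_first_person(factual))
```

A check like this could run over all of an application's message strings before release, leaving the rewording itself to a human.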

Overall, one must make the design and interaction user-friendly. Here is a good video from Don Norman on function and fashion. Hope this post was helpful!

Friday, 30 September 2011

Faceted Exploratory Search vs Faceted Metadata Search

In lecture today we discussed what the difference between Faceted Exploratory Search and Faceted Metadata Search could be, so we did some research on this.

For Faceted Exploratory Search, a paper by Markus Ueberall implies that it combines the faceted method of tagging and classification with the exploratory method of dynamically providing different users with different sets of data. Hence, the response to a user query is an individualized result that is classified into facets with tags and classifications for easy browsing.

This is usually done in cases where the results returned from an exploratory search could be large, and the faceted method is used as a complementary feature to let users arrive at their target even more efficiently.
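To show what "classified into facets for easy browsing" can look like in practice, here is a minimal sketch of the faceted half only: counting results per facet value and narrowing a result set by selected facets. The sample data and function names are invented, and this is not taken from Ueberall's paper.

```python
from collections import Counter

# Hypothetical result set returned by an exploratory search.
RESULTS = [
    {"title": "Trail shoes", "brand": "Acme", "colour": "red"},
    {"title": "Road shoes", "brand": "Acme", "colour": "blue"},
    {"title": "Running socks", "brand": "Zen", "colour": "red"},
]


def facet_counts(results, facet):
    """Count how many results fall under each value of one facet."""
    return Counter(item[facet] for item in results)


def filter_by_facets(results, **selected):
    """Keep only results whose facet values match every selected value."""
    return [
        item for item in results
        if all(item.get(facet) == value for facet, value in selected.items())
    ]


print(facet_counts(RESULTS, "colour"))
narrowed = filter_by_facets(RESULTS, brand="Acme", colour="red")
print([item["title"] for item in narrowed])
```

The counts are what a user would see next to each facet value, and each click on a value corresponds to one more keyword argument in the filter.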

Friday, 9 September 2011

Project update!!

We have decided for our project that we will be doing a marathon planner. Why? Because it seems like a pain in the a** to actually plan a marathon. I think it would be great if marathon planners found this wonderful to use, and then all of a sudden 10 times more marathons appear all over the world. Right.

Well, the main reasons actually are:

1. There are no existing products that fulfil this role on the market currently.

2. Healthy lifestyles are becoming increasingly popular, which could mean an increase in demand for such events.

Affectionately called the Plan-a-Thon, the application will be web-based, created in Flash. It will help automate some processes while giving a clear list of things for the planner to do.

Some sample drawings are included below. Please pardon the lousy art.

The finalists are the 1st and 2nd sketch. We are kind of deciding on which one to choose.

The 2nd one makes the process more procedural: the user follows a fixed procedure to plan the marathon, much like making a payment online. This will probably be easier for people new to planning marathons.

The 1st option is more function-based: each icon holds a collection of functions needed in planning a marathon. This would probably be a lot easier for more experienced planners. There is a to-do list in the 1st sketch to help new planners as well.

We are probably leaning towards the 1st sketch, but we will get in some survey data on our user base before we decide.

Tuesday, 6 September 2011

Heuristics to review a device? What about Activity Theory?

According to activity theory, humans are driven by goals to perform required actions. Being information-processing entities, they are able to ascertain a set of goals which become needs, and this leads to humans being attracted towards certain objects. Activity theory offers a set of perspectives on human activity and a set of concepts for describing that activity. Its main aim is to understand and describe the reasons behind an action, rather than to predict them. (http://www.ics.uci.edu/~corps/phaseii/nardi-ch1.pdf)

A method using activity theory to provide expert reviews for human-computer interactive devices has been created, which is called the Activity Walkthrough. The tasks to be done are first identified. The activities of the users are then oriented to the tasks the system can perform. The tasks the system can perform are verified to see their usefulness. Next, each task is broken down into specific low-level steps. The tasks are then carried out unless they are irrelevant. Finally, the data is gathered and reviewed critically. More on this method can be found in this literature review: http://delivery.acm.org/10.1145/1030000/1028052/p251-bertelsen.pdf?ip=137.132.250.14&CFID=41023923&CFTOKEN=63499383&__acm__=1315374265_ec50c1ecb079abe586c7aa8145a25edb
